Technological Activity

Research activities will focus on processes aimed at naturalizing Human-Robot Interaction (HRI). Specifically, we will study and implement anthropomorphic interaction models on robotic platforms, primarily humanoids.

Software Architecture and Cognitive Functions

In this context, we intend to develop a software framework based on ROS (Robot Operating System). This framework will integrate high-level cognitive functions designed to facilitate the implementation and rigorous testing of the developed interaction paradigms.

Vocal Interaction and Generative AI

Because vocal interaction is one of the most intuitive communication modalities, the group will concentrate on developing advanced dialogue models. These will build on recent advances in Generative Artificial Intelligence, specifically Large Language Models (LLMs).

Multimodal Approach and Internal Language

Human interaction is intrinsically multimodal: although verbal language is central, non-verbal information, such as gestures, is often critical for the correct interpretation of communicative intent.

To manage this complexity, the activity involves the study and implementation of an internal interaction language (an intermediate layer). This language will mediate between the conversational system (LLM) and the robot’s actuation systems, ensuring the ability to encode and synchronize the multimodal aspects of communication (speech, gesture, prosody).
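As an illustration only, the sketch below shows one way a message in such an intermediate layer could be represented. The class names (`Utterance`, `Prosody`, `Gesture`), their fields, and the JSON serialization are all assumptions made for this example; they are not the language the group has defined.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Prosody:
    # Hypothetical prosody controls; a real speech synthesizer may expose others.
    pitch: float = 1.0                                   # relative pitch multiplier
    rate: float = 1.0                                    # relative speaking rate
    emphasis: List[str] = field(default_factory=list)    # words to stress


@dataclass
class Gesture:
    name: str                      # illustrative label, e.g. "point" or "wave"
    start_word: int                # index of the word at which the gesture starts
    target: Optional[str] = None   # object identifier for deictic gestures


@dataclass
class Utterance:
    text: str
    prosody: Prosody = field(default_factory=Prosody)
    gestures: List[Gesture] = field(default_factory=list)

    def validate(self) -> None:
        """Check that every gesture is anchored to a word of the text."""
        n_words = len(self.text.split())
        for gesture in self.gestures:
            if not 0 <= gesture.start_word < n_words:
                raise ValueError(
                    f"gesture {gesture.name!r} is anchored outside the utterance"
                )

    def to_json(self) -> str:
        """Serialize the message so it can be passed to the actuation side."""
        self.validate()
        return json.dumps(asdict(self))


# Speech, gesture, and prosody travel together in one synchronized message.
message = Utterance(
    text="Look at that painting",
    prosody=Prosody(rate=0.9, emphasis=["that"]),
    gestures=[Gesture(name="point", start_word=2, target="painting_3")],
)
encoded = message.to_json()
```

Anchoring each gesture to a word index is one simple way to express synchronization between speech and movement; a time-based anchor would be an equally plausible choice.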

Non-Verbal Comprehension and Empathy

A fundamental research axis will be the interpretation of the human interlocutor’s non-verbal cues. Leveraging 2D and 3D sensory streams, we will study:

Gestures and body language.

Postural attitudes.

The broader aspects of metacommunication.

The interpretation of these non-verbal signals will be integrated with natural language processing. The effective integration of these channels is a fundamental prerequisite for developing empathic abilities in the robot, allowing it to adapt its response to the user’s emotional and situational context.

Expressive and Deictic Capabilities

Concurrently, work will focus on the robot’s expressive capabilities, such as vocal prosody (the tone, rhythm, and emphasis used during interlocution). Additional expressive modalities (e.g., facial expressions, gestures) will be implemented based on the specific affordances of the hardware platform used.

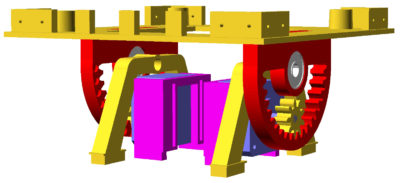

Another defining aspect will be the implementation of deictic (indicative) capabilities, namely the robot’s ability to physically point to objects in the environment while referring to them verbally (e.g., “Get that one”). This function is complex to achieve: it requires closed-loop control that integrates gestural pointing with effective object recognition and localization.
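The closed-loop idea can be sketched as follows, under simplifying assumptions: the pointer is a two-axis (pan/tilt) device, the target position is expressed in the pointer's own frame (x forward, y left, z up), and `locate` is a hypothetical callback standing in for the object recognition and localization system. The proportional gain and tolerance are illustrative values.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float, float]


def aim_angles(x: float, y: float, z: float) -> Tuple[float, float]:
    """Pan and tilt (radians) that aim the pointer at (x, y, z)."""
    pan = math.atan2(y, x)
    tilt = math.atan2(z, math.hypot(x, y))
    return pan, tilt


def wrap(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def closed_loop_point(
    locate: Callable[[], Point],
    pan: float = 0.0,
    tilt: float = 0.0,
    gain: float = 0.5,
    tol: float = 1e-3,
    max_steps: int = 100,
) -> Tuple[float, float]:
    """Proportional loop: re-localize the target at every step and
    reduce the remaining angular error by a fixed fraction."""
    for _ in range(max_steps):
        target_pan, target_tilt = aim_angles(*locate())
        err_pan = wrap(target_pan - pan)
        err_tilt = target_tilt - tilt
        if max(abs(err_pan), abs(err_tilt)) < tol:
            break
        pan += gain * err_pan
        tilt += gain * err_tilt
    return pan, tilt


# Hypothetical static target one metre ahead, one metre to the left.
final_pan, final_tilt = closed_loop_point(lambda: (1.0, 1.0, 0.0))
```

Because `locate` is queried on every iteration, the same loop would keep tracking a target whose estimated position changes between steps.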

Hardware Development and Prototyping

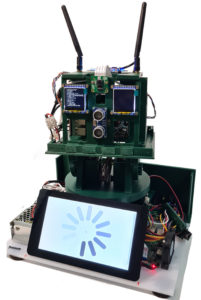

The group is also committed to designing and building an original robotic hardware platform, aiming to overcome the performance and economic limitations of current low-cost solutions.

In support, cyber-physical systems (CPS) will be developed, conceived both as auxiliary devices to be integrated into the robot (e.g., advanced sensor suites) and as systems endowed with stand-alone intelligence.

Finally, we will engineer a complete robotic prototype, which already exists and is functional at laboratory level. The ultimate goal is the transition from this prototype to derived products ready for potential industrialization.

Goals

The objective is to implement novel anthropomorphic models for Human-Robot Interaction (HRI) to naturalize the interaction, potentially utilizing cyber-physical systems (CPS) to enrich the robot’s perceptive and expressive capabilities.

The overall objective is composed of the following partial, yet self-consistent, sub-objectives:

Vocal Interaction: In close collaboration with colleagues specializing in Natural Language Processing (NLP), we will define and implement an internal interaction language (intermediate layer) between the conversational system and the robot. This language will be designed to encode the multimodal aspects of communication.

Gesture Interpretation: Implementation of algorithms for interpreting the interlocutor’s postures and gestures. This component is essential for the preceding objective, as it will enable the system to respond to deictic requests (e.g., “Tell me about that object”) based purely on indication.

Robot Expressiveness: Development of expressive capabilities coherent with the interaction, including vocal prosody (tone of voice), postural aspects, and facial expressiveness.

Deictic (Pointing) Capabilities: Implementation of indicative abilities, specifically through laser-based pointing.

Empathy: Realization of empathic capabilities. This includes, for example, the ability to comprehend facial expressions or, more generally, the interlocutor’s mood.
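To make the gesture-interpretation sub-objective more concrete, the sketch below resolves a deictic request by casting a ray along the interlocutor's forearm and choosing the known object closest to that ray. It assumes 3D elbow and wrist keypoints from some skeleton tracker and a dictionary of object positions in the same frame; the function names and the 15-degree acceptance cone are illustrative assumptions, not the group's algorithm.

```python
import math
from typing import Dict, Optional, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


def angle_between(u: Vec, v: Vec) -> float:
    """Angle in radians between two non-zero vectors."""
    cos = sum(a * b for a, b in zip(u, v)) / (_norm(u) * _norm(v))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding


def resolve_pointing(
    elbow: Vec,
    wrist: Vec,
    objects: Dict[str, Vec],
    max_angle: float = math.radians(15),
) -> Optional[str]:
    """Return the object nearest to the forearm ray, or None if no
    object lies inside the acceptance cone."""
    direction = _sub(wrist, elbow)
    if _norm(direction) == 0.0:
        raise ValueError("elbow and wrist coincide; pointing direction undefined")
    best_name, best_angle = None, max_angle
    for name, position in objects.items():
        to_object = _sub(position, wrist)
        if _norm(to_object) == 0.0:
            continue  # object coincides with the wrist; skip it
        angle = angle_between(direction, to_object)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Hypothetical scene: the user points roughly along the x axis.
scene = {"vase": (2.0, 0.1, 1.0), "chair": (0.0, 2.0, 1.0)}
selected = resolve_pointing((0.0, 0.0, 1.0), (0.3, 0.0, 1.0), scene)
```

The identifier returned here could then be handed to the dialogue system to ground a request such as “Tell me about that object”.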

Application Fields

The main application domains are:

Education: Where robot-child interaction can support pedagogical methods.

Cultural Heritage: Specifically, museum assistance, where deictic (pointing) interpretation capabilities would constitute an innovation for this field.

Medicine: Particularly in post-hospitalization

ANTONIO MESSINA

PIETRO STORNIOLO

Research product

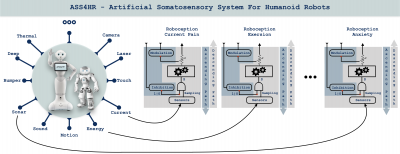

ASS4HR

ASS4HR is both a design and a ROS (Robot Operating System) implementation of an artificial somatosensory system for a humanoid robot. The design takes inspiration from the biological model and has been adapted to the robot’s characteristics. The implementation is based on the use of the soft sensors paradigm.

ASS4HR allows the robot to experience sensations that we call “roboceptions”, derived from the measurements of the basic sensors it is equipped with. In this way, the robot is aware of its physical condition and can adapt its behavior accordingly.

For each “roboception” that constitutes the artificial somatosensory system, both the ascending path and the descending path have been implemented. ASS4HR therefore makes it possible to experience, modulate, or inhibit the “roboceptions”. As a result, each robot can have its own character.
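The interplay between the two paths can be pictured with a minimal sketch. It is not the ASS4HR API: the `Roboception` class, its `gain` and `inhibited` fields, and the temperature-to-sensation mapping are all assumptions chosen for illustration. The ascending path is modeled as a soft sensor that turns raw measurements into a sensation in the range 0 to 1; the descending path is modeled as a gain and an inhibition flag applied to that sensation.

```python
from dataclasses import dataclass
from typing import Callable, Mapping


@dataclass
class Roboception:
    name: str
    # Ascending path: a soft sensor mapping raw measurements to a sensation.
    estimate: Callable[[Mapping[str, float]], float]
    # Descending path: modulation and inhibition of the sensation.
    gain: float = 1.0
    inhibited: bool = False

    def feel(self, measurements: Mapping[str, float]) -> float:
        """Return the sensation intensity, clamped to [0, 1]."""
        if self.inhibited:
            return 0.0
        raw = self.estimate(measurements)
        return max(0.0, min(1.0, self.gain * raw))


def overheating(measurements: Mapping[str, float]) -> float:
    # Hypothetical mapping: 40 degrees C gives 0.0, 80 degrees C gives 1.0.
    return (measurements["motor_temp_c"] - 40.0) / 40.0


reading = {"motor_temp_c": 60.0}

# Two robots with different descending-path settings perceive the
# same measurement differently, giving each its own "character".
sensitive = Roboception("heat", overheating, gain=1.0)
stoic = Roboception("heat", overheating, gain=0.5)

sensitive_level = sensitive.feel(reading)
stoic_level = stoic.feel(reading)
```

Setting `inhibited=True` suppresses the sensation entirely, which corresponds to the inhibition case described above.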

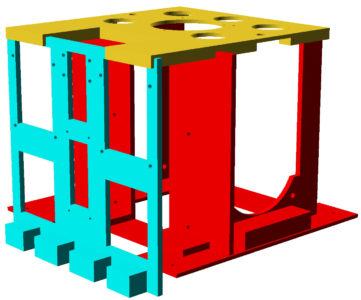

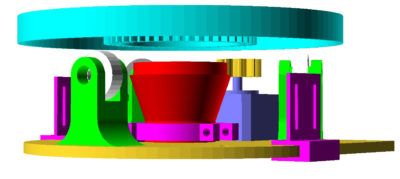

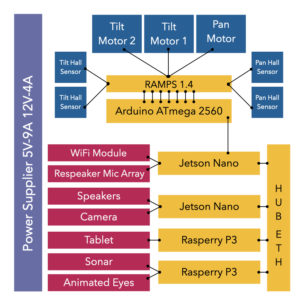

Q-ro Project

Designing and building Q-ro from scratch allowed us to bring together, in a single project, many of the best features of existing robots. The project originates from the following considerations:

• Limitations: low to medium-cost robots have numerous functional and technical-operational limitations;

• Accessibility of resources: commercial platforms such as Pepper and Nao, among the most popular today as social robots, do not allow full access to hardware resources and have a proprietary SDK;

• Costs: platforms with many features are expensive and cannot be used in small and medium-sized projects except as one-time prototypes. An experiment with dozens of robots costing tens of thousands of dollars/euros per robot is virtually unimaginable;

• Functionality: design and build a robot that costs a few hundred dollars/euros yet is equipped with most of the functions of more expensive robots;

• Scalability: design and build a modular and scalable robot from which prototypes suited to specific applications can be derived as needed.